This past semester, I was the mentor for a six credit-hour immersive learning course in which my students developed an original educational video game, The Bone Wars, based on the historic feud of 19th-century paleontologists O. C. Marsh and E. D. Cope. The team consisted of eleven students (ten undergraduate and one graduate), and like last year, we had a dedicated studio space in which to work. We were working with colleagues at The Children's Museum of Indianapolis.

I did not write much publicly about the project during the semester, and this rather lengthy post is my project retrospective. In this post, I start by giving a little background, and then I go into more details on some of the themes of the semester. These reflect concepts that arose throughout the semester, in my own reflection, in conversations, in essays, and in formal team retrospectives. My primary goal in writing this essay is to better understand the past semester so that I can design better experiences in the future. Like any team, we had successes and we had failures. I may dwell more on the failures because these are places where I may be able to do things better in the future. I will also pepper in some pictures, so that if you don't want to read the whole thing (and I don't blame you), you can at least enjoy the pictures.

| The original game logo, now a banner on the team's blog. |

Background

This project was internally funded by the university's initiative for immersive learning. I have led many immersive learning experiences, but the most ambitious and most successful one was undoubtedly my semester at the Virginia Ball Center for Creative Inquiry, where I worked with fourteen students for an entire semester on a dedicated project. That was in Spring 2012, and since then, I have had two "Spring Studio" courses: six-credit immersive experiences designed explicitly to bring ideas from the VBC to the main campus. I have recently received approval to offer another in Spring 2015, and hence my desire to deeply reflect on the past semester's experience.

Immersive learning, by definition, is to be "student-centered and faculty-mentored." This creates a tension in my participation: I want plans, ideas, and goals to emerge from the students with my guidance. Yet, college students generally have had very little leadership experience or real authority. Indeed, most of them have spent the last fifteen years in an educational system that minimizes agency, that is designed to produce obedient workers rather than ambitious creatives. This can result in a leadership vacuum on immersive learning projects, which then manifests as a stressful pedagogic question, "Do I step in and fix this for them, or do I let them fail and hope they learn from it?" I mention this here because this tension will emerge as a theme in other parts of the essay. Despite having led many immersive learning projects, I still struggle with this.

| The illustration from the game's loading screen subtly establishes that Marsh is brown and on the left, while Cope is blue and on the right. |

Prototyping

We started the semester with a few themes from The Children's Museum. After the first week's introduction to educational game design, each of us wrote a concept document for a game, choosing from among the set of themes. The choice to go with The Bone Wars was unanimous.

Once we decided on the theme, I asked each student to choose a concept document and create a physical prototype of the game. I made one also, in part so that I could model the process. The students had the option of presenting theirs to the team as contenders for the final game, and about half chose to do so. However, none of those presented were actually prototypes at all: they were ideas or sketches, but they were not playable, and hence they could not be evaluated on their own merits. It seemed that, despite the team having read a few articles on rapid prototyping (including the classic How to Prototype a Game in Under 7 Days) and game design, they had not really understood what it meant, or at least those who presented their ideas as contenders did not understand this.

This opens up a puzzle that I have not been able to solve. Half of the team did not present their designs as contenders. Were theirs actually prototypes? Did the other half of the class understand the readings, make playable prototypes, evaluate them, and then throw them away because they were not great? I hope that this is true, because then it was simply my failure to make them show me what they had, so I could explain to them that the first prototype is always thrown away. If not, however, then maybe the whole team did not understand, from the beginning, that game design is hard.

In any case, my prototype—which was created just to show them how it could be done—was the only one that was really a prototype at all. Half of the team members wanted to do another round of open prototyping, while the other half argued for moving forward into production with my prototype. My recollection is that the people in the former group were the ones who presented non-prototypes as contenders, but this could be wrong. Many in the latter group were coming from my game programming class in the Fall, where they barely got games working in a three credit-hour experience. I think it was on the strength of their argument that the team voted to move forward with my prototype.

| A prototype in the studio, task board in the back. |

As they had in the first iteration, the team had continued to report progress and claim late night design sessions. I had been distracted by the digital prototyping team, helping with difficult software architecture issues, and so I had taken it on faith that they had made progress. However, when I finally sat down with them and asked to see what there was, it was practically nothing. There had been a little incremental refinement, but nothing near the divergent prototyping that was required. Checking the clock, I gave them a fifteen-minute design challenge: take an idea that had been discussed, modify the existing prototype with this, and have it ready to test. The educational goal behind this intervention was not to produce quality design artifacts, of course, but to have them get a feel for rapid prototyping, to understand what it means to fail fast. My intention was to work alongside them, show them how in fifteen minutes I could make something, evaluate it, and determine what to do with it. I think I had two words written on an index card when I was called away by the digital prototyping squad with a legitimate software design problem. When I returned to the other squad almost twenty minutes later and asked to see what they had, they had nothing. They had chatted about game design ideas—again—rather than making anything that could be evaluated.

Two thoughts blazed through my mind. The first was, these students simply do not understand prototyping. The second was, if we don't fix this game design, we're up a creek. I gave them the challenge to make divergent prototypes and have them ready to evaluate within the next 24 hours. I got out my trusty index cards and spent an hour designing a different set of rules around some of the same game ideas—in part, again, to model the process, but also with the thought that we could have something decent if nobody else had anything. At the next meeting, I presented my new prototype. I explained how I had taken the ideas from the first prototype, as well as the playtesting results, research notes, and team ideas, and turned these into something new. The team seemed to like it, and I had hoped this would inspire them, create a spark of understanding for their own prototypes. However, they liked my revision so much, it became "the divergent prototype," and that's basically what you see in our final product. Note that their use of "divergent" here indicates that there was still a fundamental misunderstanding of the rapid prototyping process, but at this point, I internally declared it a lost cause: there was about a semester's worth of work to do and about half a semester to do it.

The game we built over the course of the next six weeks was essentially unchanged from the prototype I whipped up on some index cards: a two-player worker-placement game. It could have used some refinement, but there was no time for it.

| An interesting shot of our whiteboard, with UI design ideas sitting on top of sprint retrospective notes. |

Integration

One of the most important successes of the semester was getting our artists onto the version control system. With previous teams, we had awkward manual workflows for getting art and music assets into the game. This semester, however, we were able to teach one of the artists how to use TortoiseHg to mediate the pull-update-commit-merge-push process. Now, when a request came in to clean up an asset, or if there was something he saw that needed change, he could just make it happen. There was one morning session where he and I worked together on the fossil widgets, and it was smooth as silk to have me laying down code while he was cleaning up and producing assets. As great a success as this was, I cannot help but wonder, if we had this kind of workflow earlier, could we have showcased more of the artists' work?

Unfortunately, the audio assets did not have the same success. The composer had something wrong with his computer that prevented us from getting a Mercurial client to work (something systemic; it wasn't the only thing broken, and I suspect he needed a complete wipe). The quality of the music is undeniable, but up until the week before we shipped, there was only one song and a handful of sound effects in the game. Right before the end of the project, the composer worked with a programmer, and they put all of his songs into the game, about 30 minutes of music for a ten-minute game. This is the main reason the game takes so long to download: some of the pop-up dialog boxes have 3-minute soundtracks! The root problem was the failure to rapidly integrate and test. If the music had been integrated even a few days before shipping, we would have noticed the spike in project size, remixed the short-lived songs, and re-recorded some of the inarticulate voicework. While the visual assets underwent significant change and improvement during the last six weeks of production, the audio missed this opportunity.

| The artists weren't just good at drawing, they were both quite witty. Sadly, the final game only offers hints of both. |

Our software architecture involved model-view separation, with the model written using Test-Driven Development. TDD has been a boon to some of my other projects for dealing with the intricacies of game logic. After the first two sprints, however, we had a mess on our hands: the testing code was cumbersome, and bits of logic had been leaked into the view. After the second sprint, when we completely overhauled the core gameplay, it was an opportunity to throw away all that we had and start again—and that's what we did. The revised architecture made more prudent use of the functional-reactive paradigm via the React library, and I took the reins on the UI code, laying the groundwork for that layer.

However, the team continued to develop both the model and the view in separate, parallel layers, rather than rapidly integrating across the two. Integration was difficult and therefore not done. I paired up with some students to demonstrate a more effective model: picking a feature, then writing just enough of the model that I could add a piece to the UI—a vertical slice through the system, rather than parallel development of separate layers. I wanted this to be a major learning outcome for the students, particularly the Computer Science majors, but I doubt that most of them understood this. Even at the time, I recognized that I did a lot of the typing, when I could have stepped back and let them build an understanding by taking small steps. However, I also felt like I knew how much more work there was to be done, and they did not, and so I chose the path that built momentum toward completion.

I believe that these decisions were justified. Part of my design for the semester was inspired by research on situated learning and communities of practice, which shows that apprentices learn best in a community when they are centered on the practices of a master. By working with the students on some of these challenging design and development problems, I wanted to model not just a solution, but a mindset. However, I observed that the students seemed to bifurcate into two groups: a group who watched intently, asked questions, and copied what I did; and those who daydreamed, wandered away, and ended up contributing almost nothing to the project.

| When a student was trying to fill up the poster, he asked what else to include. I suggested, "Game development is hard." It was meant as a joke, but it looks pretty good there. |

One place where I saw a great success of integration was in the addition of cheat codes. I had explained to the team that these "cheat codes" were really tools of quality assurance, allowing testers to create specific game scenarios and verify expected behavior. This idea was bandied about for a few days without any activity, either because they didn't feel the need for it yet or they didn't know how to move forward. After I added a cheat-code-listener to manipulate a player's funds, I showed a few students how it worked. Fairly quickly, they became adept at adding new codes to test features. When it worked best, this showed how they understood how to break down a feature into vertical slices, embracing the behavior that I had modeled for them.
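To make the cheat-code idea concrete, here is a minimal sketch of such a listener. It is written in Python purely for illustration and is not the game's actual code: the `Player` and `CheatCodeListener` classes, the `money` code, and the amount it adds are all hypothetical. The point it tries to show is that each new code is a small vertical slice, one registration that reaches from keyboard input down to the model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Player:
    """Hypothetical stand-in for a player in the game model."""
    name: str
    funds: int = 0


@dataclass
class CheatCodeListener:
    """Watches typed characters and runs an action when a known code is completed."""
    codes: Dict[str, Callable[[], None]] = field(default_factory=dict)
    _buffer: str = ""

    def register(self, code: str, action: Callable[[], None]) -> None:
        """Associate a typed code with an action that sets up a test scenario."""
        self.codes[code] = action

    def on_key(self, char: str) -> None:
        """Feed one typed character; fire the first matching code, if any."""
        longest = max((len(code) for code in self.codes), default=0)
        if longest == 0:
            return  # nothing registered, nothing to remember
        # Keep only as many recent characters as the longest code needs.
        self._buffer = (self._buffer + char)[-longest:]
        for code, action in self.codes.items():
            if self._buffer.endswith(code):
                action()
                self._buffer = ""
                break


# Usage: a tester types "money" to give Marsh enough funds to try a purchase.
marsh = Player("Marsh", funds=100)
listener = CheatCodeListener()
listener.register("money", lambda: setattr(marsh, "funds", marsh.funds + 1000))
for ch in "xxmoney":
    listener.on_key(ch)
print(marsh.funds)  # 1100
```

Adding another code in this sketch is a single `register` call, which is roughly the kind of small, testable step the students picked up.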

A final perspective on the theme of integration. One of the lessons learned from the Spring 2013 Studio was that I needed to schedule formal meeting times. Hence, this semester, everyone had to be available MWF at 9AM for stand-up meetings. This got around one of the problems from last year, where we had "the morning group" and "the afternoon group," which of course led to communication problems and trust breakdowns. Our three collocated hours a week were still not enough, and I never expected them to be. The plan was to use these hours as a seed and then find additional collocated times to meet; however, what happened was that those three hours became the only time each week that everyone was together.

I think the team set up bad habits from the first week of the semester, when we were doing reading and design exercises together. I asked them to do these in the studio, so they could talk about them, share results, compare analyses, and playtest each other's designs. However, I don't think this happened: they scattered to the winds, as if this was any other course rather than a collaborative studio. Then, when we were in production, I reminded them of the need to prioritize collocated studio time over extracurricular obligations. However, these mandates (which indeed they were, from the ground rules to which everyone agreed) were treated as casual requests. It was worse than falling on deaf ears: it was insubordination. This damaged not just the team's communication and collaboration patterns, but also my trust in them.

I hosted a few social events for the team, but there was very little participation. Some team members brought up the need for more teambuilding and social events during retrospectives, but no one else seemed to act on these ideas. As a result, I think the most significant failure was that the team itself did not integrate.

| A partial wireframe for the final game UI. |

Usability

The game, as delivered, is almost completely unlearnable: if you downloaded it, it would be very unlikely that you would figure out how to play, much less how to play well. What fascinates me is that the team didn't seem to recognize this. I understand that when you are close to a project, it can be difficult to step outside oneself and see it critically, as an outsider would. Perhaps I took it for granted that my students generally understood that some games had good experience design and some had bad, and how feedback in particular is a hallmark of good design.

Here is an anecdote to demonstrate this lesson. For weeks, I pointed out to the team that there was no indication of whose turn it is. The team knew the rules for whose turn it was: Marsh goes first on odd rounds, players alternate turns. However, there was literally nothing in the game to reflect this. The inaction regarding this defect started making me wonder if the team was ignoring it or if they disagreed that it was a problem. Then, I was in the studio one day when a student turned to me excitedly and told me how he was testing a feature and had to look away from his screen. When he looked back, he could not tell whose turn it was. It was just as I had said, he confirmed, and we should do something about it. When I pressed him to reflect on why it took him so long to realize that this was a problem, he responded that he was too close to the project, he wasn't in the habit of looking at it from the player's point of view.
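The turn rule itself is simple enough to write down, which is part of why the missing indicator was so striking. The following is a minimal sketch in Python, used here only for illustration; the function names are hypothetical, and the assumption that Cope opens even rounds is mine, inferred from "Marsh goes first on odd rounds."

```python
PLAYERS = ("Marsh", "Cope")


def first_player(round_number: int) -> str:
    """Marsh opens odd rounds (from the rules); Cope opening even rounds is assumed."""
    if round_number < 1:
        raise ValueError("rounds are numbered from 1")
    return PLAYERS[0] if round_number % 2 == 1 else PLAYERS[1]


def current_player(round_number: int, turns_taken: int) -> str:
    """Return whose turn it is after `turns_taken` completed turns this round."""
    if turns_taken < 0:
        raise ValueError("turns_taken cannot be negative")
    opener = PLAYERS.index(first_player(round_number))
    # Players strictly alternate within a round.
    return PLAYERS[(opener + turns_taken) % 2]


def turn_banner(round_number: int, turns_taken: int) -> str:
    """Text a view could display so the player never has to guess."""
    return f"Round {round_number}: {current_player(round_number, turns_taken)}'s turn"


print(turn_banner(1, 0))  # Round 1: Marsh's turn
print(turn_banner(2, 3))  # Round 2: Marsh's turn
```

The model already knew all of this; the defect was that nothing like `turn_banner` ever surfaced it to the player.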

This defect was addressed, albeit inelegantly, but the game still suffers from a woeful lack of both feedback and feedforward. Before taking actions, it's not clear what they will do; after taking actions, it's not always clear they did. This leads us to the next theme...

| The "Fossil Hunter" dialog box, which I designed, is one of the most often misunderstood screens in the game. |

Finishing

In our final meeting, it was clear that the students were proud of themselves for having completed a potentially shippable product. However, this had been the goal every sprint: produce a potentially shippable product that we can show to stakeholders and playtest with the target audience. Yes, the team deserved some pride for having finished one, but it's not clear to me that the team, as a whole, recognized that the game itself was essentially unfinished. The ideal situation was that they would have completed a potentially shippable product each sprint and learned to critically analyze it, and the next sprint would be spent improving it. The real situation is that they felt a bit of joy, and relief, at having completed a big-bang integration after fifteen weeks of effort.

I am sad about this because it is another educational opportunity lost. I am not confident that the students can look at the product they completed and clearly articulate where it is strong and where it is weak, or develop any kind of coherent plan for finishing it. Perhaps in another sprint, this could have been accomplished, and there are definitely at least three weeks of work that could be done polishing the game. However, that's not a sprint we have. I am hopeful that some team members recognize this, but it's only hope.

Community

For the first time, I had a team member dedicated to community outreach. This is something I had always wanted since working on immersive learning projects, but I had not previously been able to recruit one. His work resulted in the team blog as well as the Twitter handle. He also put together a series of podcasts for the blog that tell some of the history of Marsh and Cope.

His contributions were excellent, and they give me some ideas for how I might work with such a student in the future. It would be worth investing more effort in getting the attention of serious games networks, because this could result in both dissemination and free expert evaluation. Despite having the blog and Twitter accounts, the game was really developed behind closed doors. Indeed, much of this is due to the failure of integration, but if we got over that hurdle in a future semester, we could be much bolder in promoting the potentially shippable products themselves. Similarly, although we ostensibly worked with The Children's Museum, this didn't manifest in our outreach efforts.

This student—like many others—also pigeonholed himself into his comfort zone, but that's contrary to the values of agile software development. The team saw him as an "other," and he didn't appear at home in the studio space after the physical prototyping ended. He produced the podcasts in part because the game did not capture the historical narrative of The Bone Wars well, and so they were designed as ancillary artifacts. What if, instead, he had worked more closely with the team to explore these in still screens or animatics? As mentioned above, it was very common for students to wall themselves in by their disciplinary background, but when engaged in interdisciplinary activity, I believe it is necessary to break down these walls. I had never worked with a student with these communication production skills before, and so I think I did not recognize what his walls would look like. I am hopeful that, from his positive experience and contributions, I can continue to recruit one or two similar team members for future projects.

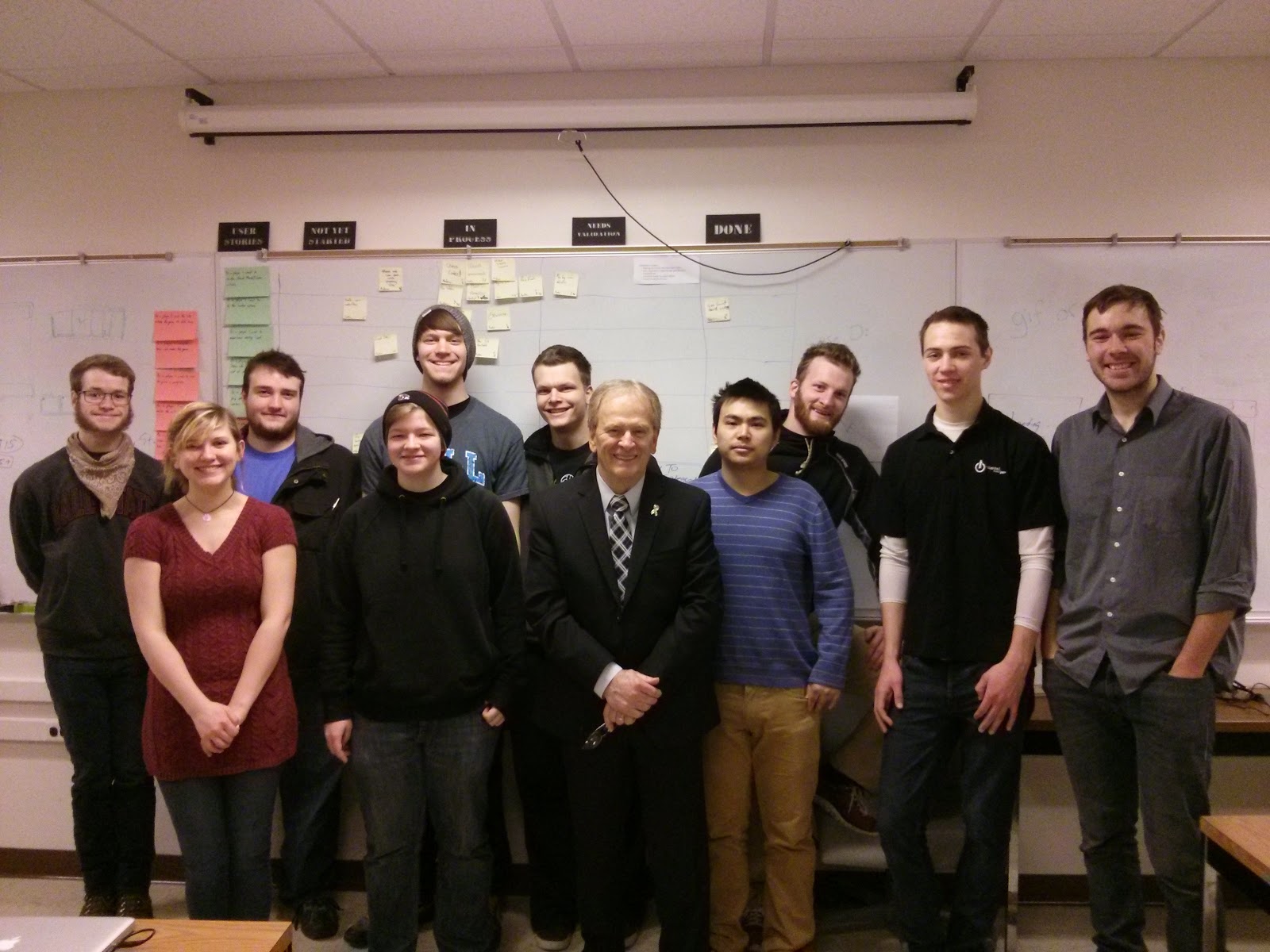

| Our visit from Muncie Mayor Dennis Tyler was due to the efforts of our social media manager. |

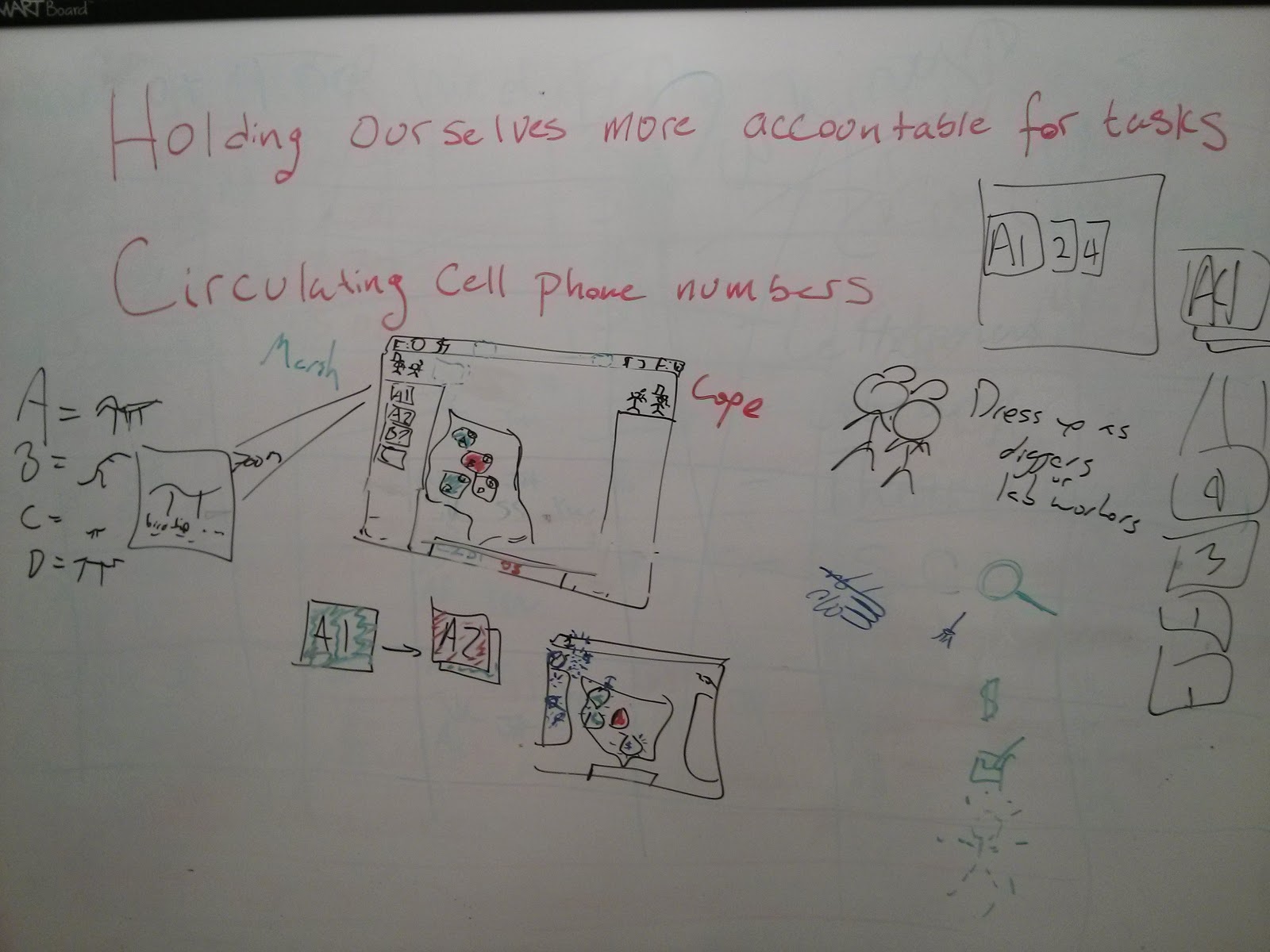

Accountability

In the Spring 2013 Studio, I used a student assessment model borrowed from industry: a mid-semester performance evaluation, and an end-of-semester performance evaluation. Basically, the model was, "Do honest work, and trust me to give you a fair grade." While this worked well in most cases, it was not perfect. One student had contributed almost nothing all semester, but because of the collaborative nature of the studio, I felt that I could not give him as low a grade as he deserved. If he had appealed, I would have had very little evidence. To guard against this, and to encourage academic reflection, I used more formal evaluations this semester. At the end of each sprint, I was to assign students participation scores (0-3 points) based on how well they kept their commitments. Each student also wrote an individual reflection essay (0-3 points). However, this system may have proved to be worse, at least for me.

In the pre-production sprint, I gave everybody full credit and some formative feedback because I thought that they had all kept their commitments. Now, I am not so sure. It seems to me that if the team had really read and thought about the readings assigned that first week, some of those ideas would have shown up in the rest of the semester, but they didn't—not in reflections, not in essays, not in conversations, and not in practice. I noted this in the first production sprint, and so I changed the format slightly from my assigning participation scores into a survey question: did you keep your time commitment to the project? This is where things really broke down for me. Many students claimed to have kept all their commitments, putting in their 18 hours per week of meaningful effort into the project—and I did not believe them. I think they lied to me, willingly and knowingly, to get a good grade. The survey was conducted via Blackboard, and in my responses, I said as much to them. I told them what I thought their participation was, and what grade I was recording for them... and nobody pushed back. Nobody justified their original response, nobody provided evidence. This silence has to be taken as admission of guilt.

And it didn't just happen the first sprint.

This experience left a very bad taste in my mouth. That metaphor is not quite right... it left a weight on my heart. It's hard to listen to a stand-up report, knowing that several members of the team are content to lie to you as long as they don't get caught. I don't know if these students recognized what a burden it was on me. I don't know if they have any experience, any guilt, that would indicate to them how hard it is to regain trust. I suspect not, because as far as I could tell, no one seemed to try. Some did ramp up their efforts, but only to the minimum level to which they were originally committed, and some not even that much.

This leaves me with a difficult design problem: do I return to ad hoc assessment, knowing that some people may sneak by me, or do I use more rigorous assessment, and open myself to the heartache of having students lie to me for something as inconsequential as a grade?

Incidentally, I laid some of this on the table for the students in our final meeting, which was the last day of finals week. I'm afraid I may have been a bit of a downer, but I wanted them to understand that betraying my trust was not just a professional blunder, but that it actually hurt me personally. No one really responded to this, and there weren't any more reflection essays. I wonder what they think, and I hope they learned from it. The team's risks are academic, not economic. I realize that my risks tend to be emotional.

Closing Thoughts

I am faced with a conundrum: what happens to The Bone Wars next? I feel a great sense of ownership over the project: it is my design, and I wrote a large portion of the code. As it is, however, it's almost unplayable because of the lack of user experience design. It would only take a few days to polish it, and it would not require any new assets. This would make it ready for presentation at conferences and competitions, and it would make it a piece that I personally can be proud of. If I did this, however, would it still be the result of a "student-centered and faculty-mentored" experience? Does that matter, once the semester is over and credit is assigned? I am honestly not sure, but the truth is that I have already made some modifications. Version 0.4, released by the team, contained some embarrassing typographic errors, again due to a failure to integrate early. I quickly patched it and re-released it as 0.5. I also have a version 0.6 that I've been tinkering with, a bit with a student but mostly by myself. This version is already much nicer than 0.5, but I am not certain what its future is.

The team had very little interaction with our community partner. We had one meeting with them, toward the end of the semester. It was extremely useful and provided a lot of direction, but only three team members were able to attend. I had positioned myself as a liaison between the team and The Children's Museum for two reasons: first, I knew that they were dealing with serious production stress so I did not want to bother them unnecessarily; second, I have seen students flub these community relationships, and I didn't want to damage ours. I need to consider whether or not this was a mistake. The easier fix, however, would be to arrange meetings ahead of time, far enough in advance that the team feels the pressure of having something playable to show them.

I know I need to do something more intentional with the scheduling. The past two years, I have given preference to getting the best people even if we don't have the best schedule. Now, I think this was a mistake. If the best people are getting together at odd times—or not getting together at all—then their efforts will be fruitless. If I was dealing only with Computer Science majors, it would be easy, since I know and can even influence our course schedule. However, every other major added to the mix increases the complexity. The most difficult to work with are the artists, who have significant studio obligations, but they are also the most critical for the kind of games that interest me. Right now, it's still an open problem, although I certainly need to have something more formal in place for next Spring.

The game design and UI design issues are tricky ones, but again, I think the past two years point me in the right direction. I have tried to model these six-credit experiences after my 15-credit VBC experience, but even there, the students barely finished their project on time. Prefacing the production with a "crash course in game design" has not been fruitful: game design is too difficult for the students to do well with such a tight timetable. I think that next year, I will have to have the critical design elements done ahead of time. Right now, I am leaning toward doing the design myself, working with my partners at the Children's Museum, and using that as a case study in Fall's Serious Game Design colloquium and as a starter specification in the Spring. This would allow the next crop of Spring Studio students to focus more on the problems of production and, hopefully, get a better idea of what it means to make something really shippable.

In a hallway conversation with one of the students, I articulated a taxonomy that I used for personal, informal assessment of learning experience design. It looks something like this:

- What succeeded because of my designs?

- What succeeded despite my designs?

- What succeeded regardless of my designs?

- What failed because of my action or inaction?

- What failed despite my action or inaction?

- What failed regardless of my action or inaction?

I still don't have clear answers to many of these questions. The good news, however, is that this immersive learning experience was also the subject of a rigorous study by a doctoral candidate in the English department. He has been interviewing students and gathering evidence to understand the students' lived experience, focusing primarily on writing, activity theory, and genre research. I hope that the study will shed some light into the areas that were hidden from me, and that I can use this to design even better experiences.

I know that many of my Spring 2014 students had a rewarding learning experience, and I hope they carry the lessons with them for a long time. There is a subset of the team of whom I am very proud: students who learned what it meant to take initiative, to make meaningful contributions, to learn from mistakes, to recognize weaknesses, to see the value of collaboration. To those of you who read this, I hope that this essay helps you understand my perspective a bit more, and of course I welcome your comments. As I said in the introduction, the intended outcome of this essay is that I can design even better experiences in the future.

Oh, this sounds so much like my semester. I have run client-driven projects in my software engineering course several times, but this time around was a bit of a disaster, and many of the problems are reflected in this blog posting. I share the same heaviness of spirit as I sit here, writing a project summary for our client. I would love to talk more with you about this - I am deeply interested in what happens in student teams while working on large projects.

I'd be glad to share some of the joys and sorrows. It is a great way to lead learning experiences, and I know my students get a lot out of it, and the experience stays with them.

I'm currently working on a manuscript that brings together some of my experiences in a more formal way. I will definitely let you know how that piece ends up.